The Quick Python Book

Part 4

Working with data

In this part, you get some practice in using Python, and in particular, using it to work with data. Handling data is one of Python’s strengths. I start with basic file handling; then I move through reading from and writing to flat files, working with more structured formats such as JSON and Excel, using databases, and using Python to explore data.

These chapters are more project oriented than the rest of the book and are intended to give you the opportunity to get hands-on experience in using Python to handle data. The chapters and projects in this part can be done in any order or combination that suits your needs.

20 Basic file wrangling

This chapter covers

- Moving and renaming files

- Compressing and encrypting files

- Selectively deleting files

This chapter deals with the basic operations you can use when you have an everincreasing collection of files to manage. Those files might be log files, or they might be from a regular data feed, but whatever their source, you can’t simply discard them immediately. How do you save them, manage them, and ultimately dispose of them according to a plan but without manual intervention?

20.1 The problem: The never-ending flow of data files

Many systems generate a continuous series of data files. These files might be the log files from an e-commerce server or a regular process; they might be a nightly feed of product information from a server; they might be automated feeds of items for online advertising or historical data of stock trades; or they might come from a thousand other sources. They’re often flat text files, uncompressed, with raw data that’s either an input or a byproduct of other processes. In spite of their humble nature, however, the data they contain has some potential value, so the files can’t be discarded at the end of the day—which means that every day, their numbers grow. Over time, files accumulate until dealing with them manually becomes unworkable, and the amount of storage they consume becomes unacceptable.

20.2 Scenario: The product feed from hell

A typical situation I’ve encountered is a daily feed of product data. This data might be coming in from a supplier or going out for online marketing, but the basic aspects are the same.

Consider the example of a product feed coming from a supplier. The feed file comes in once a day, with one row for each item that the business supplies. Each row has fields for the supplier’s stock-keeping unit (SKU) number; a brief description of the item; the item’s cost, height, length, and width; the item’s status (in stock or back-ordered, say); and probably several other things, depending on the business.

In addition to this basic info file, you might well be getting others, possibly of related products, more detailed item attributes, or something else. In that case, you end up with several files with the same filenames arriving every day and landing in the same directory for processing.

Now assume that you get three related files every day: item_info.txt, item_ attributes.txt, and related_items.txt. These three files come in every day and get processed. If processing were the only requirement, you wouldn’t have to worry much; you could just let each day’s set of files replace the last and be done with it. But what if you can’t throw the data away? You may want to keep the raw data in case there’s a question about the accuracy of the process, and you need to refer to past files. Or you may want to track the changes in the data over time. Whatever the reason, the need to keep the files means that you need to do some processing.

The simplest thing you might do is mark the files with the dates on which they were received and move them to an archive folder. That way, each new set of files can be received, processed, renamed, and moved out of the way so that the process can be repeated with no loss of data.

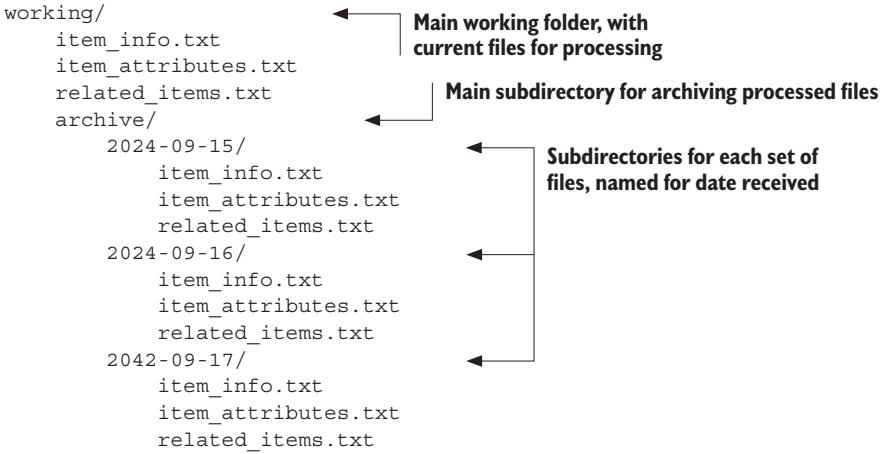

After a few repetitions, the directory structure might look something like the following:

working/ # Main working folder, with current files for processing

item_info.txt

item_attributes.txt

related_items.txt

archive/ # Subdirectory for archiving processed files

item_info_2024-09-15.txt

item_attributes_2024-09-15.txt

related_items_2024-09-15.txt

item_info_2024-07-16.txt

item_attributes_2024-09-16.txt

related_items_2024-09-16.txt

item_info_2024-09-17.txt

item_attributes_2024-09-17.txt

related_items_2024-09-17.txt

...Think about the steps needed to make this process happen. First, you need to rename the files so that the current date is added to the filename. To do that, you need to get the names of the files you want to rename; then you need to get the stem of the filenames without the extensions. When you have the stem, you need to add a string based on the current date, add the extension back to the end, and then actually change the filename and move it to the archive directory.

What are your options for handling the tasks I’ve identified? What modules in the standard library can you think of that will do the job? If you want, you can even stop right now and work out the code to do it. Then compare your solution with the one you develop later.

You can get the names of the files in several ways. If you’re sure that the names are always exactly the same and that there aren’t many files, you could hardcode them into your script. A safer way, however, is to use the pathlib module and a path object’s glob method, as follows:

import pathlib

cur_path = pathlib.Path(".")

FILE_PATTERN = "*.txt"

path_list = cur_path.glob(FILE_PATTERN)

print(list(path_list))[PosixPath('item_attributes.txt'), PosixPath('related_items.txt'),

PosixPath('item_info.txt')]Now you can step through the paths that match your FILE_PATTERN and apply the needed changes. Remember that you need to add the date as part of the name of each file, as well move the renamed files to the archive directory. When you use pathlib, the entire operation might look like the following listing.

Listing 20.1 File files_01.py

import datetime

import pathlib

FILE_PATTERN = "*.txt" # <-- Set the pattern to match files

ARCHIVE = "archive"

def main():

date_string = datetime.date.today().strftime("%Y-%m-%d") # <-- Creates a date string based on today’s date

cur_path = pathlib.Path(".")

archive_path = cur_path.joinpath(ARCHIVE)

archive_path.mkdir(exist_ok=True) # <-- Creates an archive directory if it doesn’t exist

paths = cur_path.glob(FILE_PATTERN)

for path in paths:

new_filename = f"{path.stem}_{date_string}{path.suffix}"

new_path = archive_path.joinpath(new_filename) # <-- Creates a path from the archive path and the new filename

path.rename(new_path) # <-- Renames (and moves) the file as one step

if __name__ == '__main__':

main()The key elements in this script are finding all files that match *txt; creating a date string to add to the filenames, making sure the archive directory exists; and then moving and renaming the files. It’s worth noting here that Path objects make this operation simpler, because no special parsing is needed to separate the filename stem and suffix. This operation is also simpler than you might expect because the rename method can in effect move a file by using a path that includes the new location.

This script is a very simple one and does the job effectively in very few lines of code. In the next sections, you consider how to handle more complex requirements.

Because the preceding solution is very simple, there are likely to be many situations that it won’t handle well. What are some potential problems that might arise with the example script? How might you remedy these problems?

Consider the naming convention used for the files, which is based on the year, month, and day, in that order. What advantages do you see in that convention? What might be the disadvantages? Can you make any arguments for putting the date string somewhere else in the filename, such as the beginning or the end?

20.3 More organization

The solution to storing files described in the previous section works, but it does have some disadvantages. For one thing, as the files accumulate, managing them might become a bit more trouble, because over the course of a year, you’d have 365 sets of related files in the same directory, and you could find the related files only by inspecting their names. If the files arrive more frequently, of course, or if there are more related files in a set, the hassle would be even greater.

To mitigate this problem, you can change the way you archive the files. Instead of changing the filenames to include the dates on which they were received, you can create a separate subdirectory for each set of files and name that subdirectory after the date received. Your directory structure might look like the following:

This scheme has the advantage that each set of files is grouped together. No matter how many sets of files you get or how many files you have in a set, it’s easy to find all the files of a particular set.

How would you modify the code that you developed to archive each set of files in subdirectories named according to date received? Feel free to take the time to implement the code and test it.

It turns out that archiving the files by subdirectory isn’t much more work than the first solution. The only additional step is to create the subdirectory before renaming the file. The following listing shows one way to perform this step.

Listing 20.2 File files_02.py

import datetime

import pathlib

FILE_PATTERN = "*.txt"

ARCHIVE = "archive"

def main():

date_string = datetime.date.today().strftime("%Y-%m-%d")

cur_path = pathlib.Path(".")

archive_path = cur_path.joinpath(ARCHIVE)

archive_path.mkdir(exist_ok=True) # <-- This directory needs to be created only the first time the script is run.

new_path = archive_path.joinpath(date_string)

new_path.mkdir(exist_ok=True) # <-- This directory needs to be created only once, before the files are moved into it.

paths = cur_path.glob(FILE_PATTERN)

for path in paths:

path.rename(new_path.joinpath(path.name))

if __name__ == '__main__':

main()The basic elements of this solution are the same as in the previous one but combined slightly differently. In this case, the date string is used to create a subdirectory in the archive directory and is not added to the filename. Then the file is moved to that directory but not renamed. This solution groups related files, which makes managing them as sets somewhat easier.

How might you create a script that does the same thing without using pathlib? What libraries and functions would you use?

20.4 Saving storage space: Compression and grooming

So far, you’ve been concerned mainly with managing the groups of files received. Over time, however, the data files accumulate until the amount of storage they need becomes a concern. When that happens, you have several choices. One option is to get a bigger disk. Particularly if you’re on a cloud-based platform, it may be easy and economical to adopt this strategy. Do keep in mind, however, that adding storage doesn’t really solve the problem; it merely postpones solving it.

20.4.1 Compressing files

If the space that the files are taking up is a problem, the next approach you might consider is compressing them. You have numerous ways to compress a file or set of files, but in general, these methods are similar. In this section, you consider archiving each day’s data file to a single zip file. If the files are mainly text files and are fairly large, the savings in storage achieved by compression can be impressive.

For this script, you use the same date string with a .zip extension as the name of each zip file. In listing 20.2, you created a new directory in the archive directory and then moved the files into it, which resulted in a directory structure that looks like the following:

working/ # Main working folder, where current files are processed

archive/

2024-09-15.zip # Zip files, each one containing that day's files

2024-09-16.zip # Zip files, each one containing that day's files

2024-09-17.zip # Zip files, each one containing that day's filesObviously, to use zip files, you need to change some of the steps you used previously.

Write the pseudocode for a solution that stores data files in zip files. What modules and functions or methods do you intend to use? Try coding your solution to make sure that it works.

One key addition in the new script is an import of the zipfile library and, with it, the code to create a new zipfile object in the archive directory. After that, you can use the zipfile object to write the data files to the new zip file. Finally, because you’re no longer actually moving files, you need to remove the original files from the working directory. One solution looks like the following listing.

Listing 20.3 File files_03.py

import datetime

import pathlib

import zipfile # <-- Imports zipfile library

FILE_PATTERN = "*.txt"

ARCHIVE = "archive"

def main():

date_string = datetime.date.today().strftime("%Y-%m-%d")

cur_path = pathlib.Path(".")

archive_path = cur_path.joinpath(ARCHIVE)

archive_path.mkdir(exist_ok=True)

paths = cur_path.glob(FILE_PATTERN)

zip_file_path = cur_path.joinpath(ARCHIVE, date_string + ".zip") # <-- Creates the path to the zip file in the archive directory

zip_file = zipfile.ZipFile(str(zip_file_path), "w") # <-- Opens the new zipfile object for writing

for path in paths:

zip_file.write(str(path)) # <-- Writes the current file to the zip file

path.unlink() # <-- Removes the current file from the working directory

if __name__ == '__main__':

main()This code is a bit more complex because now, instead of creating a subdirectory, we are using the date string to name and create a zipfile object. Then the contents of each file in turn are written to the zipfile object and that file is unlinked (or deleted). When the zipfile object goes out of scope at the end of the script, it is automatically closed.

20.4.2 Grooming files

Compressing data files into zip file archives can save an impressive amount of space and may be all you need. If you have a lot of files, however, or files that don’t compress much (such as JPEG image files), you may still find yourself running short of storage space. You may also find that your data doesn’t change much, making it unnecessary to keep an archived copy of every dataset in the longer term. That is, although it may be useful to keep every day’s data for the past week or month, it may not be worth the storage to keep every dataset for much longer. For data older than a few months, it may be acceptable to keep just one set of files per week or even one set per month.

The process of removing files after they reach a certain age is sometimes called grooming. Suppose that after several months of receiving a set of data files every day and archiving them in a zip file, you’re told that you should retain only one file a week of the files that are more than one month old.

The simplest grooming script removes any files that you no longer need—in this case, all but one file a week for anything older than a month old. In designing this script, it’s helpful to know the answers to two questions:

- Because you need to save one file a week, would it be much easier to simply pick the day of the week you want to save?

- How often should you do this grooming: daily, weekly, or once a month? If you decide that grooming should take place daily, it might make sense to combine the grooming with the archiving script. If, on the other hand, you need to groom only once a week or once a month, the two operations should be in separate scripts.

For this example, to keep things clear, you write a separate grooming script that can be run at any interval and that removes all the unneeded files. Further, assume that you’ve decided to keep only the files received on Tuesdays that are more than one month old. The following listing shows a sample grooming script.

Listing 20.4 File files_04.py

from datetime import datetime, timedelta

import pathlib

import zipfile

FILE_PATTERN = "*.zip"

ARCHIVE = "archive"

ARCHIVE_WEEKDAY = 1

def main():

cur_path = pathlib.Path(".")

zip_file_path = cur_path.joinpath(ARCHIVE)

paths = zip_file_path.glob(FILE_PATTERN)

current_date = datetime.today() # <-- Gets a datetime object for the current day

for path in paths:

name = path.stem # <-- Stem = filename without any extension

path_date = datetime.strptime(name, "%Y-%m-%d") # <-- strptime parses a string into a datetime object.

path_timedelta = current_date - path_date # <-- Subtracting one date from another yields a timedelta object.

if (path_timedelta > timedelta(days=30) # <-- timedelta(days = 30) creates a timedelta object of 30 days; the weekday() method returns an integer for the day of the week, with Monday = 0.

and path_date.weekday() != ARCHIVE_WEEKDAY):

path.unlink()

if __name__ == '__main__':

main()The code shows how Python’s datetime and pathlib libraries can be combined to groom files by date with only a few lines of code. Because your archive files have names derived from the dates on which they were received, you can get those file paths by using the glob method, extract the stem, and use strptime to parse the stem into a datetime object. From there, you can use datetime’s timedelta objects and the weekday() method to find a file’s age and the day of the week and then remove (unlink) the files that have passed the time limit.

Take some time to consider different grooming options. How would you modify the code in listing 20.4 to keep only one file a month? How would you change it so that files from the previous month and older were groomed to save one a week? (Note: This is not the same as older than 30 days!)

Summary

- The pathlib module can greatly simplify file operations, such as finding the root and extension, moving and renaming, and matching wildcards.

- As the number and complexity of files increases, automated archiving solutions are vital, and Python offers several easy ways to create them.

- Moving data to specific directories is as easy as renaming the files and may make managing them easier.

- You can dramatically save storage space by writing to compressed archives, such as zip files.

- As the amount of data grows, it is often useful to groom data files by deleting files that have a certain age.

21 Processing data files

This chapter covers

- Using extract-transform-load

- Reading text data files (plain text and CSV)

- Reading spreadsheet files

- Normalizing, cleaning, and sorting data

- Writing data files

Much of the data available is contained in text files. This data can range from unstructured text, such as a corpus of tweets or literary texts, to more structured data in which each row is a record and the fields are delimited by a special character, such as a comma, a tab, or a pipe (|). Text files can be huge; a dataset can be spread over tens or even hundreds of files, and the data in it can be incomplete or horribly dirty. With all the variations, it’s almost inevitable that you’ll need to read and use data from text files. This chapter gives you strategies for using Python to do exactly that.

21.1 Welcome to ETL

The need to get data out of files, parse it, turn it into a useful format, and then do something with it has been around for as long as there have been data files. In fact, there is a standard term for the process: extract-transform-load (ETL). The extraction refers to the process of reading a data source and parsing it, if necessary. The transformation can be cleaning and normalizing the data, as well as combining, breaking up, or reorganizing the records it contains. The loading refers to storing the transformed data in a new place, either a different file or a database. This chapter deals with the basics of ETL in Python, starting with text-based data files and storing the transformed data in other files. I look at more structured data files in chapter 23 and storage in databases in chapter 24.

21.2 Reading text files

The first part of ETL—the “extract” portion—involves opening a file and reading its contents. This process seems like a simple one, but even at this point there can be problems, such as the file’s size. If a file is too large to fit into memory and be manipulated, you need to structure your code to handle smaller segments of the file, possibly operating one line at a time.

21.2.1 Text encoding: ASCII, Unicode, and others

Another possible pitfall is in the encoding. This chapter deals with text files, and in fact, much of the data exchanged in the real world is in text files. But the exact nature of text can vary from application to application, from person to person, and of course from country to country.

Sometimes, “text” means something in ASCII encoding, which has 128 characters, only 95 of which are printable. The good news about ASCII encoding is that it’s the lowest common denominator of most data exchange. The bad news is that it doesn’t begin to handle the complexities of the many alphabets and writing systems of the world. Reading files using ASCII encoding is almost certain to cause trouble and throw errors on character values that it doesn’t understand, whether it’s a German ü, a Portuguese ç, or something from almost any language other than English.

These errors arise because ASCII is based on 7-bit values, whereas the bytes in a typical file are 8 bits, allowing 256 possible values as opposed to the 128 of a 7-bit value. It’s routine to use those additional values to store additional characters—anything from extra punctuation (such as the printer’s en dash and em dash) to symbols (such as the trademark, copyright, and degree symbols) to accented versions of alphabetical characters. The problem has always been that if, in reading a text file, you encounter a character in the 128 values outside the ASCII range, you have no way of knowing for sure how it was encoded. Is the character value of 214, for example, a division symbol? an Ö? or something else? Short of having the code that created the file, you have no way of knowing.

Unicode and UTF-8

One way to mitigate this confusion is Unicode. The Unicode encoding called UTF-8 accepts the basic ASCII characters without any change but also allows an almost unlimited set of other characters and symbols according to the Unicode standard. Because of its flexibility, UTF-8 was used in 98% of web pages served at the time of writing, which means that your best bet for reading text files is to assume UTF-8 encoding. If the files contain only ASCII characters, they’ll still be read correctly, but you’ll also be covered if other characters are encoded in UTF-8. The good news is that the Python 3 string data type was designed to handle Unicode by default.

Even with Unicode, there’ll be occasions when your text contains values that can’t be successfully encoded. Fortunately, the open function in Python accepts an optional errors parameter that tells it how to deal with encoding errors when reading or writing files. The default option is ‘strict’, which causes an error to be raised whenever an encoding error is encountered. Other useful options are ‘ignore’, which causes the character causing the error to be skipped; ‘replace’, which causes the character to be replaced by a marker character (often, ?); ‘backslashreplace’, which replaces the character with a backslash escape sequence; and ‘surrogateescape’, which translates the offending character to a private Unicode code point on reading and back to the original sequence of bytes on writing. Your particular use case will determine how strict you need to be in handling or resolving encoding problems.

Consider a short example of a file containing an invalid UTF-8 character and see how the different options handle that character. First, write the file, using bytes and binary mode:

open('test.txt', 'wb').write(bytes([65, 66, 67, 255, 192,193]))This code results in a file that contains “ABC” followed by three non-ASCII characters, which may be rendered differently depending on the encoding used. If you use an editor like Vim to look at the file, you might see

ABCÿÀÁNow that you have the file, try reading it with the default ‘strict’ errors option:

x = open('test.txt').read()---------------------------------------------------------------------------

UnicodeDecodeError Traceback (most recent call last)

<ipython-input-2-2cb3105c1e5f> in <cell line: 1>()

----> 1 x = open('test.txt').read()

/usr/lib/python3.10/codecs.py in decode(self, input, final)

320 # decode input (taking the buffer into account)

321 data = self.buffer + input

--> 322 (result, consumed) = self._buffer_decode(data, self.errors,

final)

323 # keep undecoded input until the next call

324 self.buffer = data[consumed:]

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xff in position 3: invalid start byteThe fourth byte, which had a value of 255, isn’t a valid UTF-8 character in that position, so the ‘strict’ errors setting raises an exception. Now see how the other error options handle the same file, keeping in mind that the last three characters raise an error:

open('test.txt', errors='ignore').read()

#> 'ABC'

open('test.txt', errors='replace').read()

#> 'ABC ' # <-- Replaced error characters with chr(65533)

open('test.txt', errors='surrogateescape').read()

#> 'ABC\udcff\udcc0\udcc1'

open('test.txt', errors='backslashreplace').read()

#> 'ABC\\xff\\xc0\\xc1'If you want any problem characters to disappear, ‘ignore’ is the option to use. The ‘replace’ option only marks the place occupied by the invalid character, and the other options in different ways attempt to preserve the invalid characters without interpretation.

21.2.2 Unstructured text

Unstructured text files are the easiest sort of data to read but the hardest to extract information from. Processing unstructured text can vary enormously, depending on both the nature of the text and what you want to do with it, so any comprehensive discussion of text processing is beyond the scope of this book. A short example, however, can illustrate some of the basic topics and set the stage for a discussion of structured text data files.

One of the simplest concerns is deciding what forms a basic logical unit in the file. If you have a corpus of thousands of tweets, the text of Moby Dick, or a collection of news stories, you need to be able to break them up into cohesive units. In the case of tweets, each may fit onto a single line, and you can read and process each line of the file fairly simply.

In the case of Moby Dick or even a news story, the problem can be trickier. You may not want to treat all of a novel or news item as a single item, in many cases. But if that’s the case, you need to decide what sort of unit you do want and then come up with a strategy to divide the file accordingly. Perhaps you want to consider the text paragraph by paragraph. In that case, you need to identify how paragraphs are separated in your file and create your code accordingly. If a paragraph is the same as a line in the text file, the job is easy. Often, however, the line breaks in a text file are shorter, and you need to do a bit more work.

Now look at a couple of examples:

Call me Ishmael. Some years ago--never mind how long precisely--

having little or no money in my purse, and nothing particular

to interest me on shore, I thought I would sail about a little

and see the watery part of the world. It is a way I have

of driving off the spleen and regulating the circulation.

Whenever I find myself growing grim about the mouth;

whenever it is a damp, drizzly November in my soul; whenever I

find myself involuntarily pausing before coffin warehouses,

and bringing up the rear of every funeral I meet;

and especially whenever my hypos get such an upper hand of me,

that it requires a strong moral principle to prevent me from

deliberately stepping into the street, and methodically knocking

people's hats off--then, I account it high time to get to sea

as soon as I can. This is my substitute for pistol and ball.

With a philosophical flourish Cato throws himself upon his sword;

I quietly take to the ship. There is nothing surprising in this.

If they but knew it, almost all men in their degree, some time

or other, cherish very nearly the same feelings towards

the ocean with me.

There now is your insular city of the Manhattoes, belted round by wharves

as Indian isles by coral reefs--commerce surrounds it with her surf.

Right and left, the streets take you waterward. Its extreme downtown

is the battery, where that noble mole is washed by waves, and cooled

by breezes, which a few hours previous were out of sight of land.

Look at the crowds of water-gazers there.In the sample, which is indeed the beginning of Moby Dick, the lines are broken more or less as they might be on the page, and paragraphs are indicated by a single blank line. If you want to deal with each paragraph as a unit, you need to break the text on the blank lines. Fortunately, this task is easy if you use the string split() method. Each newline character in a string can represented by “”. Naturally, the last line of a paragraph’s text ends with a newline, and if the next line is blank, it’s immediately followed by a second newline for the blank line:

moby_text = open("moby_01.txt").read() # <-- Reads all of file as a single string

moby_paragraphs = moby_text.split("\n\n") # <-- Splits on two newlines together

print(moby_paragraphs[1])There now is your insular city of the Manhattoes, belted round by wharves

as Indian isles by coral reefs--commerce surrounds it with her surf.

Right and left, the streets take you waterward. Its extreme downtown

is the battery, where that noble mole is washed by waves, and cooled

by breezes, which a few hours previous were out of sight of land.

Look at the crowds of water-gazers there.Splitting the text into paragraphs can be done by splitting on two newlines-moby_text.split("\n\n"). Such splitting is a very simple first step in handling unstructured text, and you might also need to do more normalization of the text before processing. Suppose that you want to count the rate of occurrence of every word in a text file. If you just split the file on whitespace, you get a list of words in the file. Counting their occurrences accurately will be hard, however, because This, this, this., and this, are not the same. The way to make this code work is to normalize the text by removing the punctuation and making everything the same case before processing. For the preceding example text, the code for a normalized list of words might look like this:

moby_text = open("moby_01.txt").read() # <-- Reads all of the file as a single string

moby_paragraphs = moby_text.split("\n\n")

moby = moby_paragraphs[1].lower() # <-- Makes everything lowercase

moby = moby.replace(".", "") # <-- Removes periods

moby = moby.replace(",", "") # <-- Removes commas

moby_words = moby.split()

print(moby_words)['there', 'now', 'is', 'your', 'insular', 'city', 'of', 'the',

'manhattoes,', 'belted', 'round', 'by', 'wharves', 'as', 'indian', 'isles'

'by', 'coral', 'reefs--commerce', 'surrounds', 'it', 'with', 'her',

'surf', 'right', 'and', 'left,', 'the', 'streets', 'take', 'you',

'waterward', 'its', 'extreme', 'downtown', 'is', 'the', 'battery,',

'where', 'that', 'noble', 'mole', 'is', 'washed', 'by', 'waves,', 'and',

'cooled', 'by', 'breezes,', 'which', 'a', 'few', 'hours', 'previous',

'were', 'out', 'of', 'sight', 'of', 'land', 'look', 'at', 'the', 'crowds',

'of', 'water-gazers', 'there']Here we use the .lower string method first and then use the .replace method twice to remove two punctuation characters. Of course, as we saw back in chapter 6, it would probably be more efficient to use the string .translate method to replace all of the punctuation in one operation.

Look closely at the list of words generated. Do you see any problems with the normalization so far? What other problems do you think you might encounter in a longer section of text? How do you think you might deal with those problems?

21.2.3 Delimited flat files

Although reading unstructured text files is easy, the downside is their very lack of structure. It’s often much more useful to have some organization in the file to help with picking out individual values. The simplest way is to break the file into lines and have one element of information per line. You may have a list of the names of files to be processed, a list of people’s names that need to be printed (on name tags, say), or maybe a series of temperature readings from a remote monitor. In such cases, the data parsing is very simple: you read in the line and convert it to the right type, if necessary. Then the file is ready to use.

Most of the time, however, things aren’t quite so simple. Usually, you need to group multiple related bits of information, and you need your code to read them in together. The common way to do this is to put the related pieces of information on the same line, separated by a special character. That way, as you read each line of the file, you can use the special characters to split the file into its different fields and put the values of those fields in variables for later processing.

The following listing shows a simple example of temperature data in delimited format.

Listing 21.1 temp_data_pipes_00a.txt

State|Month Day, Year Code|Avg Daily Max Air Temperature (F)|Record Count

➥for Daily Max Air Temp (F)

Illinois|1979/01/01|17.48|994

Illinois|1979/01/02|4.64|994

Illinois|1979/01/03|11.05|994

Illinois|1979/01/04|9.51|994

Illinois|1979/05/15|68.42|994

Illinois|1979/05/16|70.29|994

Illinois|1979/05/17|75.34|994

Illinois|1979/05/18|79.13|994

Illinois|1979/05/19|74.94|994This data is pipe delimited, meaning that each field in the line is separated by the pipe (|) character—in this case, giving you four fields: the state of the observations, the date of the observations, the average high temperature, and the number of stations reporting. Other common delimiters are the tab character and the comma. The comma is perhaps the most common, but the delimiter could be any character you don’t expect to occur in the values. (More about that topic next.) Comma delimiters are so common that this format is often called comma-separated values (CSV), and files of this type often have a .csv extension as a hint of their format.

Whatever character is being used as the delimiter, if you know what character it is, you can write your own code in Python to break each line into its fields and return them as a list. In the previous case, you can use the string split() method to break a line into a list of values:

line = "Illinois|1979/01/01|17.48|994"

print(line.split("|"))

#> ['Illinois', '1979/01/01', '17.48', '994']Note that this technique is very easy to do but leaves all the values as strings, which might not be convenient for later processing.

Write the code to read a text file (assume the file is temp_data_ pipes_00a.txt, as shown in the example), split each line of the file into a list of values, and add that list to a single list of records.

What concerns or problems did you encounter in implementing this code? How might you go about converting the last three fields to the correct date, real, and int types?

21.2.4 The csv module

If you need to do much processing of delimited data files, you should become familiar with the csv module and its options. When I’ve been asked to name my favorite module in the Python standard library, more than once I’ve cited the csv module—not because it’s glamorous (it isn’t) but because it has probably saved me more work and kept me from more self-inflicted bugs over my career than any other module.

The csv module is a perfect case of Python’s “batteries included” philosophy. Although it’s perfectly possible, and in many cases not even terribly hard, to roll your own code to read delimited files, it’s even easier and much more reliable to use the Python module. The csv module has been tested and optimized, and it has features that you probably wouldn’t bother to write if you had to do it yourself but that are truly handy and time saving when available.

Look at the previous data and decide how you’d read it by using the csv module. The code to parse the data has to do two things: read each line and strip off the trailing newline character and then break up the line on the pipe character and append that list of values to a list of lines. Your solution to the exercise might look something like the following:

results = []

for line in open("temp_data_pipes_00a.txt"):

fields = line.strip().split("|")

results.append(fields)

print(results)[['Notes'],

['State',

'Month Day, Year Code',

'Avg Daily Max Air Temperature (F)',

'Record Count for Daily Max Air Temp (F)'],

['Illinois', '1979/01/01', '17.48', '994'],

['Illinois', '1979/01/02', '4.64', '994'],

['Illinois', '1979/01/03', '11.05', '994'],

['Illinois', '1979/01/04', '9.51', '994'],

['Illinois', '1979/05/15', '68.42', '994'],

['Illinois', '1979/05/16', '70.29', '994'],

['Illinois', '1979/05/17', '75.34', '994'],

['Illinois', '1979/05/18', '79.13', '994'],

['Illinois', '1979/05/19', '74.94', '994']]To do the same thing with the csv module, the code might be something like

import csv

results = [fields for fields in csv.reader(open("temp_data_pipes_00a.txt",

newline=''), delimiter="|")]

results[['State', 'Month Day, Year Code', 'Avg Daily Max Air Temperature (F)',

'Record Count for Daily Max Air Temp (F)'], ['Illinois', '1979/01/01',

'17.48', '994'], ['Illinois', '1979/01/02', '4.64', '994'], ['Illinois',

'1979/01/03', '11.05', '994'], ['Illinois', '1979/01/04', '9.51', '994'],

['Illinois', '1979/05/15', '68.42', '994'], ['Illinois', '1979/05/16',

'70.29', '994'], ['Illinois', '1979/05/17', '75.34', '994'], ['Illinois',

'1979/05/18', '79.13', '994'], ['Illinois', '1979/05/19', '74.94', '994']]In this simple case, the gain over rolling your own code doesn’t seem so great. Still, the code is two lines shorter and a bit clearer, and there’s no need to worry about stripping off newline characters. The real advantages come when you want to deal with more challenging cases.

The data in the example is real, but it’s actually been simplified and cleaned. The real data from the source is more complex. The real data has more fields, some fields are in quotes while others are not, and the first field is empty. The original is tab-delimited, but for the sake of illustration, I present it as comma delimited here.

Listing 21.2 temp_data_01.csv

"Notes","State","State Code","Month Day, Year","Month Day, Year Code",Avg

Daily Max Air Temperature (F),Record Count for Daily Max Air Temp (F),Min

Temp for Daily Max Air Temp (F),Max Temp for Daily Max Air Temp (F),Avg

Daily Max Heat Index (F),Record Count for Daily Max Heat Index (F),Min for

Daily Max Heat Index (F),Max for Daily Max Heat Index (F),Daily Max Heat

Index (F) % Coverage

,"Illinois","17","Jan 01,

1979","1979/01/01",17.48,994,6.00,30.50,Missing,0,Missing,Missing,0.00%

,"Illinois","17","Jan 02,

1979","1979/01/02",4.64,994,-6.40,15.80,Missing,0,Missing,Missing,0.00%

,"Illinois","17","Jan 03,

1979","1979/01/03",11.05,994,-0.70,24.70,Missing,0,Missing,Missing,0.00%

,"Illinois","17","Jan 04,

1979","1979/01/04",9.51,994,0.20,27.60,Missing,0,Missing,Missing,0.00%

,"Illinois","17","May 15,

1979","1979/05/15",68.42,994,61.00,75.10,Missing,0,Missing,Missing,0.00%

,"Illinois","17","May 16,

1979","1979/05/16",70.29,994,63.40,73.50,Missing,0,Missing,Missing,0.00%

,"Illinois","17","May 17, 1979","1979/05/17",75.34,994,64.00,80.50,82.60,2,82

.40,82.80,0.20%

,"Illinois","17","May 18, 1979","1979/05/18",79.13,994,75.50,82.10,81.42,349,

80.20,83.40,35.11%

,"Illinois","17","May 19, 1979","1979/05/19",74.94,994,66.90,83.10,82.87,78,8

1.60,85.20,7.85%Notice that some fields include commas. The convention in that case is to put quotes around a field to indicate that it’s not supposed to be parsed for delimiters. It’s quite common, as here, to quote only some fields, especially those in which a value might contain the delimiter character. It also happens, as here, that some fields are quoted even if they’re not likely to contain the delimiting character.

In a case like this one, your homegrown code becomes cumbersome. Now you can no longer split the line on the delimiting character; you need to be sure that you look only at delimiters that aren’t inside quoted strings. Also, you need to remove the quotes around quoted strings, which might occur in any position or not at all. With the csv module, you don’t need to change your code at all. In fact, because the comma is the default delimiter, you don’t even need to specify it:

results2 = [fields for fields in csv.reader(open("temp_data_01.csv",

newline=''))]

results2[['Notes', 'State', 'State Code', 'Month Day, Year', 'Month Day, Year

Code', 'Avg Daily Max Air Temperature (F)', 'Record Count for Daily Max Air

Temp (F)', 'Min Temp for Daily Max Air Temp (F)', 'Max Temp for Daily Max

Air Temp (F)', 'Avg Daily Max Heat Index (F)', 'Record Count for Daily Max

Heat Index (F)', 'Min for Daily Max Heat Index (F)', 'Max for Daily Max

Heat Index (F)', 'Daily Max Heat Index (F) % Coverage'], [], ['',

'Illinois', '17', 'Jan 01, 1979', '1979/01/01', '17.48', '994', '6.00',

'30.50', 'Missing', '0', 'Missing', 'Missing', '0.00%'], ['', 'Illinois',

'17', 'Jan 02, 1979', '1979/01/02', '4.64', '994', '-6.40', '15.80',

'Missing', '0', 'Missing', 'Missing', '0.00%'], ['', 'Illinois', '17', 'Jan

03, 1979', '1979/01/03', '11.05', '994', '-0.70', '24.70', 'Missing', '0',

'Missing', 'Missing', '0.00%'], ['', 'Illinois', '17', 'Jan 04, 1979',

'1979/01/04', '9.51', '994', '0.20', '27.60', 'Missing', '0', 'Missing',

'Missing', '0.00%'], ['', 'Illinois', '17', 'May 15, 1979', '1979/05/15',

'68.42', '994', '61.00', '75.10', 'Missing', '0', 'Missing', 'Missing',

'0.00%'], ['', 'Illinois', '17', 'May 16, 1979', '1979/05/16', '70.29',

'994', '63.40', '73.50', 'Missing', '0', 'Missing', 'Missing', '0.00%'],

['', 'Illinois', '17', 'May 17, 1979', '1979/05/17', '75.34', '994',

'64.00', '80.50', '82.60', '2', '82.40', '82.80', '0.20%'], ['',

'Illinois', '17', 'May 18, 1979', '1979/05/18', '79.13', '994', '75.50',

'82.10', '81.42', '349', '80.20', '83.40', '35.11%'], ['', 'Illinois',

'17', 'May 19, 1979', '1979/05/19', '74.94', '994', '66.90', '83.10',

\'82.87', '78', '81.60', '85.20', '7.85%']]Notice that the extra quotes have been removed and that any field values with commas have the commas intact inside the fields—all without any more characters in the command.

Consider how you’d approach the problems of handling quoted fields and embedded delimiter characters if you didn’t have the csv library. Which would be easier to handle: the quoting or the embedded delimiters?

21.2.5 Reading a csv file as a list of dictionaries

In the preceding examples, you got a row of data back as a list of fields. This result works fine in many cases, but sometimes it may be handy to get the rows back as dictionaries where the field name is the key. For this use case, the csv library has a DictReader, which can take a list of fields as a parameter or can read them from the first line of the data. If you want to open the data with a DictReader, the code would look like this:

results = [fields for fields in csv.DictReader(open("temp_data_01.csv",

newline=''))]

results[0]{'Notes': '',

'State': 'Illinois',

'State Code': '17',

'Month Day, Year': 'Jan 01, 1979',

'Month Day, Year Code': '1979/01/01',

'Avg Daily Max Air Temperature (F)': '17.48',

'Record Count for Daily Max Air Temp (F)': '994',

'Min Temp for Daily Max Air Temp (F)': '6.00',

'Max Temp for Daily Max Air Temp (F)': '30.50',

'Avg Daily Max Heat Index (F)': 'Missing',

'Record Count for Daily Max Heat Index (F)': '0',

'Min for Daily Max Heat Index (F)': 'Missing',

'Max for Daily Max Heat Index (F)': 'Missing',

'Daily Max Heat Index (F) % Coverage': '0.00%'}Note that, with a dictionary, the fields stay in their original order:

results[0]['State']'Illinois'If the data is particularly complex, and specific fields need to be manipulated, a DictReader can make it much easier to be sure you’re getting the right field; it also makes your code somewhat easier to understand. Conversely, if your dataset is quite large, you need to keep in mind that DictReader can take on the order of twice as long to read the same amount of data.

21.3 Excel files

The other common file format that I discuss in this chapter is the Excel file, which is the format that Microsoft Excel uses to store spreadsheets. I include Excel files here because the way you end up treating them is very similar to the way you treat delimited files. In fact, because Excel can both read and write CSV files, the quickest and easiest way to extract data from an Excel spreadsheet file often is to open it in Excel and then save it as a CSV file. This procedure doesn’t always make sense, however, particularly if you have a lot of files. In that case, even though you could theoretically automate the process of opening and saving each file in CSV format, it’s probably faster to deal with the Excel files directly.

It’s beyond the scope of this book to have an in-depth discussion of spreadsheet files, with their options for multiple sheets in the same file, macros, and various formatting options. Instead, in this section, I look at an example of reading a simple one-sheet file simply to extract the data from it.

As it happens, Python’s standard library doesn’t have a module to read or write Excel files. To read that format, you need to install an external module. Fortunately, several modules are available to do the job. For this example, you use one called openpyxl, which is available from the Python package repository. You can install it with the following command from a command line:

pip install openpyxlFigure 21.1 is a view of the previous temperature data, but in a spreadsheet.

Reading the file is fairly simple, but it’s still more work than CSV files require. First, you need to load the workbook; next, you need to get the specific sheet; then, you can iterate over the rows; and from there, you extract the values of the cells. Some sample code to read the spreadsheet looks like the following:

from openpyxl import load_workbook

wb = load_workbook('temp_data_01.xlsx')

results = []

ws = wb.worksheets[0]

for row in ws.iter_rows():

results.append([cell.value for cell in row])

print(results)[['Notes', 'State', 'State Code', 'Month Day, Year', 'Month Day, Year

Code', 'Avg Daily Max Air Temperature (F)', 'Record Count for Daily Max Air

Temp (F)', 'Min Temp for Daily Max Air Temp (F)', 'Max Temp for Daily Max

Air Temp (F)', 'Avg Daily Max Heat Index (F)', 'Record Count for Daily Max

Heat Index (F)', 'Min for Daily Max Heat Index (F)', 'Max for Daily Max

Heat Index (F)', 'Daily Max Heat Index (F) % Coverage'], [None, 'Illinois',

17, 'Jan 01, 1979', '1979/01/01', 17.48, 994, 6, 30.5, 'Missing', 0,

'Missing', 'Missing', '0.00%'], [None, 'Illinois', 17, 'Jan 02, 1979',

'1979/01/02', 4.64, 994, -6.4, 15.8, 'Missing', 0, 'Missing', 'Missing',

'0.00%'], [None, 'Illinois', 17, 'Jan 03, 1979', '1979/01/03', 11.05, 994,

-0.7, 24.7, 'Missing', 0, 'Missing', 'Missing', '0.00%'], [None,

'Illinois', 17, 'Jan 04, 1979', '1979/01/04', 9.51, 994, 0.2, 27.6,

'Missing', 0, 'Missing', 'Missing', '0.00%'], [None, 'Illinois', 17, 'May

15, 1979', '1979/05/15', 68.42, 994, 61, 75.1, 'Missing', 0, 'Missing',

'Missing', '0.00%'], [None, 'Illinois', 17, 'May 16, 1979', '1979/05/16',

70.29, 994, 63.4, 73.5, 'Missing', 0, 'Missing', 'Missing', '0.00%'],

[None, 'Illinois', 17, 'May 17, 1979', '1979/05/17', 75.34, 994, 64,

80.5,82.6, 2, 82.4, 82.8, '0.20%'], [None, 'Illinois', 17, 'May 18, 1979',

'1979/05/18', 79.13, 994, 75.5, 82.1, 81.42, 349, 80.2, 83.4,

'35.11%'],[None, 'Illinois', 17, 'May 19, 1979', '1979/05/19', 74.94,

994,66.9, 83.1, 82.87, 78, 81.6, 85.2, '7.85%']]This code gets you the same results as the much simpler code did for a CSV file. It’s not surprising that the code to read a spreadsheet is more complex, because spreadsheets are themselves much more complex objects. You should also be sure that you understand the way that data has been stored in the spreadsheet. If the spreadsheet contains formatting that has some significance, if labels need to be disregarded or handled differently, or if formulas and references need to be processed, you need to dig deeper into how those elements should be processed, and you need to write more complex code.

Spreadsheets also often have other possible problems. As of this writing, it’s common for spreadsheets to be limited to around a million rows. Although that limit sounds large, more and more often you’ll need to handle datasets that are larger. Also, spreadsheets sometimes automatically apply inconvenient formatting. One company I worked for had part numbers that consisted of a digit and at least one letter followed by some combination of digits and letters. It was possible to get a part number such as 1E20. Most spreadsheets automatically interpret 1E20 as scientific notation and save it as 1.00E + 20 (1 × 10 to the 20th power) while leaving 1F20 as a string. For some reason, it’s rather difficult to keep this from happening, and particularly with a large dataset, the problem won’t be detected until farther down the pipeline, if all. For these reasons, I recommend using CSV or delimited files when at all possible. Users usually can save a spreadsheet as CSV, so there’s no need to put up with the extra complexity and formatting hassles that spreadsheets involve.

21.4 Data cleaning

One common problem you’ll encounter in processing text-based data files is dirty data. By dirty, I mean that there are all sorts of surprises in the data, such as null values, values that aren’t legal for your encoding, or extra whitespace. The data may also be unsorted or in an order that makes processing difficult. The process of dealing with situations like these is called data cleaning.

21.4.1 Cleaning

In a very simple example, you might need to process a file that was exported from a spreadsheet or other financial program, and the columns dealing with money may have percentage and currency symbols (such as %, $, £, and €), as well as extra groupings that use a period or comma. Data from other sources may have other surprises that make processing tricky if they’re not caught in advance. Look again at the temperature data you saw previously. The first data line looks like this:

[None, 'Illinois', 17, 'Jan 01, 1979', '1979/01/01', 17.48, 994, 6, 30.5,

2.89, 994, -13.6, 15.8, 'Missing', 0, 'Missing', 'Missing', '0.00%']Some columns, such as ‘State’ (field 2) and ‘Notes’ (field 1), are clearly text, and you wouldn’t be likely to do much with them. There are also two date fields in different formats, and you might well want to do calculations with the dates, possibly to change the order of the data and to group rows by month or day or possibly to calculate how far apart in time two rows are.

The rest of the fields seem to be different types of numbers; the temperatures are decimals, and the record counts columns are integers. Notice, however, that the heat index temperatures have a variation: when the value for the ‘Max Temp for Daily Max Air Temp (F)’ field is below 80, the values for the heat index fields aren’t reported but instead are listed as ‘Missing’, and the record count is 0. Also note that the ‘Daily Max Heat Index (F) % Coverage’ field is expressed as a percentage of the number of temperature records that also qualify to have a heat index. Both of these things will be problematic if you want to do any math calculations on the values in those fields, because both ‘Missing’ and any number ending with % will be parsed as strings, not numbers.

Cleaning data like this can be done at different steps in the process. Quite often, I prefer to clean the data as it’s being read from the file, so I might well replace the ‘Missing’ with a None value or an empty string as the lines are being processed. You could also leave the ‘Missing’ strings in place and write your code so that no math operations are performed on a value if it is ‘Missing’.

How would you handle the fields with ‘Missing’ as possible values for math calculations? Can you write a snippet of code that averages one of those columns?

What would you do with the Daily Max Heat Index (F) % Coverage column at the end so that you could also report the average coverage? In your opinion, would the solution to this problem be at all linked to the way that the ‘Missing’ entries were handled?

21.4.2 Sorting

As I mentioned earlier, it’s often useful to have data in the text file sorted before processing. Sorting the data makes it easier to spot and handle duplicate values, and it can also help bring together related rows for quicker or easier processing. In one case, I received a 20 million–row file of attributes and values, in which arbitrary numbers of them needed to be matched with items from a master SKU list. Sorting the rows by the item ID made gathering each item’s attributes much faster. How you do the sorting depends on the size of the data file relative to your available memory and on the complexity of the sort. If all the lines of the file can fit comfortably into available memory, the easiest thing may be to read all of the lines into a list and use the list’s sort method. For example, if datafile contained the following lines:

ZZZZZZ

CCCCCC

QQQQQQ

AAAAAAthen we could sort them this way:

lines = open("datafile").readlines()

lines.sort()

print(lines)['AAAAAA\n', 'CCCCCC\n', 'QQQQQQ\n', 'ZZZZZZ\n']You could also use the sorted() function, as in sorted_lines = sorted(lines). This function preserves the order of the lines in your original list, which usually is unnecessary. The drawback to using the sorted() function is that it creates a new copy of the list. This process takes slightly longer and consumes twice as much memory, which might be a bigger concern.

If the dataset is larger than memory and the sort is very simple (just by an easily grabbed field), it may be easier to use an external utility, such as the UNIX sort command, to preprocess the data:

! sort datafile > datafile.srt

! cat datafile.srtAAAAAA

CCCCCC

QQQQQQ

ZZZZZZIn either case, sorting can be done in reverse order and can be keyed by values, not the beginning of the line. For such occasions, you need to study the documentation of the sorting tool you choose to use. A simple example in Python would be to make a sort of lines of text case insensitive. To do this, you give the sort method a key function that makes the element lowercase before making a comparison:

lines.sort(key=str.lower)This example uses a lambda function to ignore the first five characters of each string:

lines.sort(key=lambda x: x[5:])Using key functions to determine the behavior of sorts in Python is very handy, but be aware that the key function is called a lot in the process of sorting, so a complex key function could mean a real performance slowdown, particularly with a large dataset.

21.4.3 Data cleaning problems and pitfalls

It seems that there are as many types of dirty data as there are sources and use cases for that data. Your data will always have quirks that do everything from making processing less accurate to making it impossible to even load the data. As a result, I can’t provide an exhaustive list of the problems you might encounter and how to deal with them, but I can give you some general hints:

- Beware of whitespace and null characters. The problem with whitespace characters is that you can’t see them, but that doesn’t mean that they can’t cause trouble. Extra whitespace at the beginning and end of data lines, extra whitespace around individual fields, and tabs instead of spaces (or vice versa) can all make your data loading and processing more troublesome, and these problems aren’t always easily apparent. Similarly, text files with null characters (ASCII 0) may seem okay on inspection but break on loading and processing.

- Beware of punctuation. Punctuation can also be a problem. Extra commas or periods can mess up CSV files and the processing of numeric fields, and unescaped or unmatched quote characters can also confuse things.

- Break down and debug the steps. It’s easier to debug a problem if each step is separate, which means putting each operation on a separate line, being more verbose, and using more variables. But the work is worth it. For one thing, it makes any exceptions that are raised easier to understand, and it also makes debugging easier, whether with print statements, logging, or the Python debugger. It may also be helpful to save the data after each step and to cut the file size to just a few lines that cause error.

21.5 Writing data files

The last part of the ETL process may involve saving the transformed data to a database (which I discuss in chapter 22), but often it involves writing the data to files. These files may be used as input for other applications and analysis, either by people or by other applications. Usually, you have a particular file specification listing what fields of data should be included, what they should be named, what format and constraints there should be for each, and so on.

21.5.1 CSV and other delimited files

Probably the easiest thing of all is to write your data to CSV files. Because you’ve already loaded, parsed, cleaned, and transformed the data, you’re unlikely to hit any unresolved problems with the data itself. And again, using the csv module from the Python standard library makes your work much easier.

Writing delimited files with the csv module is pretty much the reverse of the read process. Again, you need to specify the delimiter that you want to use, and again, the csv module takes care of any situations in which your delimiting character is included in a field:

temperature_data = [['State', 'Month Day, Year Code', 'Avg Daily Max Air

Temperature (F)', 'Record Count for Daily Max Air Temp (F)'], ['Illinois',

'1979/01/01', '17.48', '994'], ['Illinois', '1979/01/02', '4.64', '994'],

['Illinois', '1979/01/03', '11.05', '994'], ['Illinois', '1979/01/04',

'9.51', '994'], ['Illinois', '1979/05/15', '68.42', '994'], ['Illinois',

'1979/05/16', '70.29', '994'], ['Illinois', '1979/05/17', '75.34', '994'],

['Illinois', '1979/05/18', '79.13', '994'], ['Illinois', '1979/05/19',

'74.94', '994']]

csv.writer(open("temp_data_03.csv", "w",

newline='')).writerows(temperature_data)This code results in the following file:

State,"Month Day, Year Code",Avg Daily Max Air Temperature (F),Record Coun

➥ for Daily Max Air Temp (F)

Illinois,1979/01/01,17.48,994

Illinois,1979/01/02,4.64,994

Illinois,1979/01/03,11.05,994

Illinois,1979/01/04,9.51,994

Illinois,1979/05/15,68.42,994

Illinois,1979/05/16,70.29,994

Illinois,1979/05/17,75.34,994

Illinois,1979/05/18,79.13,994

Illinois,1979/05/19,74.94,994Just as when reading from a CSV file, it’s possible to write dictionaries instead of lists if you use a DictWriter. If you do use a DictWriter, be aware of a couple of points: you must specify the field names in a list when you create the writer, and you can use the DictWriter’s writeheader method to write the header at the top of the file. So assume that you have the same data as previously but in dictionary format:

data = [{'State': 'Illinois',

'Month Day, Year Code': '1979/01/01',

'Avg Daily Max Air Temperature (F)': '17.48',

'Record Count for Daily Max Air Temp (F)': '994'}]

fields = ['State', 'Month Day, Year Code',

'Avg Daily Max Air Temperature (F)',

'Record Count for Daily Max Air Temp (F)']You can use a DictWriter object from the csv module to write each row, a dictionary, to the correct fields in the CSV file:

fields = ['State', 'Month Day, Year Code',

'Avg Daily Max Air Temperature (F)',

'Record Count for Daily Max Air Temp (F)']

dict_writer = csv.DictWriter(open("temp_data_04.csv", "w"),

fieldnames=fields)

dict_writer.writeheader()

dict_writer.writerows(data)

del dict_writer21.5.2 Writing Excel files

Writing spreadsheet files is unsurprisingly similar to reading them. You need to create a workbook, or spreadsheet file; then, you need to create a sheet or sheets; and finally, you write the data in the appropriate cells. You could create a new spreadsheet from your CSV data file like this:

from openpyxl import Workbook

data_rows = [fields for fields in csv.reader(open("temp_data_01.csv"))]

wb = Workbook()

ws = wb.active

ws.title = "temperature data"

for row in data_rows:

ws.append(row)

wb.save("temp_data_02.xlsx")It’s also possible to add formatting to cells as you write them to the spreadsheet file. For more on how to add formatting, please refer to the xlswriter documentation.

21.5.3 Packaging data files

If you have several related data files, or if your files are large, it may make sense to package them in a compressed archive. Although various archive formats are in use, the zip file remains popular and almost universally accessible to users on almost every platform. For hints on how to create zipfile packages of your data files, please refer to chapter 20.

21.6 Weather observations

The file of weather observations provided here (Illinois_weather_1979-2011.txt) is by month and then by county for the state of Illinois from 1979 to 2011. Write the code to process this file to extract the data for Chicago (Cook County) into a single CSV or spreadsheet file. This process includes replacing the ‘Missing’ strings with empty strings and translating the percentage to a decimal. You may also consider what fields are repetitive (and therefore can be omitted or stored elsewhere). The proof that you’ve got it right occurs when you load the file into a spreadsheet, or you can also use Colaboratory’s file browser (the file icon at the very left) and double-click on it to see the data as a table.

There is some documentation at the end of the file, so you will need to stop processing the file when the first field of the line is “—”.

For reference, it’s always a good idea to look at a few lines (at least) of the raw file:

['"Notes"\t"Month"\t"Month Code"\t"County"\t"County Code"\tAvg Daily Max

Air Temperature (F)\tRecord Count for Daily Max Air Temp (F)\tMin Temp for

Daily Max Air Temp (F)\tMax Temp for Daily Max Air Temp (F)\tAvg Daily Min

Air Temperature (F)\tRecord Count for Daily Min Air Temp (F)\tMin Temp for

Daily Min Air Temp (F)\tMax Temp for Daily Min Air Temp (F)\tAvg Daily Max

Heat Index (F)\tRecord Count for Daily Max Heat Index (F)\tMin for Daily

Max Heat Index (F)\tMax for Daily Max Heat Index (F)\tDaily Max Heat Index

(F) % Coverage\n',

'\t"Jan"\t"1"\t"Adams County, IL"\t"17001"\t31.89\t19437\t

10.00\t68.90\t18.01\t19437\t-26.20\t50.30\tMissing\t0\tMissing\tMissing\

t0.00%\n',

'\t"Jan"\t"1"\t"Alexander County,

IL"\t"17003"\t41.07\t6138\t2.60\t73.20\t26.48\t6138\t

14.00\t60.30\tMissing\t0\tMissing\tMissing\t0.00%\n',

'\t"Jan"\t"1"\t"Bond County, IL"\t"17005"\t35.71\t6138\t-

2.70\t69.50\t22.18\t6138\t-17.90\t57.20\tMissing\t0\tMissing\tMissing\

t0.00%\n']21.6.1 Solving the problem with AI-generated code

This problem is mostly procedural, with little higher-level design, so it’s fairly suitable for an AI solution. As usual, we also need to be sure to include all of the necessary information clearly in the prompt.

21.6.2 Solutions and discussion

This is a much easier problem than those in some of the previous chapters, since we are really just doing some basic data cleaning. The main things to do are load the file, fix/discard some of the data fields, and then write the file as a CSV file.

The human solution

This solution is pretty simple: the input and output files are opened and the raw fields are read in, processed, and saved:

import csv

with (

open("Illinois_weather_1979-2011.txt") as infile,

open("chicago_weather_1979-2011.csv", "w") as outfile,

): # <-- Opens both input and output files

for row in csv.reader(infile, delimiter="\t"):

if row[0] == "---": # <-- Checks for beginning of documentation section

break

if "Cook" in row[3]:

del row[0:2] # <-- Removes first two fields of line

row = [

item.replace("Missing", "") for item in row

] # <-- Replaces “Missing” with an empty string

row[-1] = row[-1].replace(

"%", ""

) # <-- Removes % character from the last field

row[-1] = (

float(row[-1]) / 100 if str.isdigit(row[-1]) else row[-1]

) # <-- Convert percentage to a decimal value

csv.writer(outfile).writerow(row)As mentioned, the last few lines of this file are documentation, so we end processing when we hit the separator “—”. Since we are looking for Chicago (Cook County) data, we will only process a line if the “County” field contains “Cook”. Alternatively, we could look in the file and see that the county code for Cook County is “17031” and only process lines where that is in the “County Code” field. As we process the lines, the first thing we do is remove the first two fields—the first field (“Notes”) is empty, and second is the month name, which we don’t need since we have the month’s number in the following field.

The next two operations are changing “Missing” to an empty string in any field and then removing the % character and converting a text string of the percentage to a decimal number (e.g., “10” becomes 0,1).

The code finishes by writing the newly processed fields to the CVS file.

The AI solutions

Copilot was given the following prompt, which was based closely on the lab assignment:

The file of weather observations provided here (Illinois_weather_1979 2011.txt) is by month and then by county for the state of Illinois from 1979 to 2011. Write the code to process this file to extract the data for Chicago (Cook County) into a single CSV or spreadsheet file. This process includes replacing the ‘Missing’ strings with empty strings and translating the percentage to a decimal. You may also consider what fields are repetitive (and therefore can be omitted or stored elsewhere). Note: there is some documentation at the end of the file, so you will need to stop processing the file when the first field of the line is “—”.

Copilot offered the following plan, which makes sense:

- 1 Open the file Illinois_weather_1979-2011.txt for reading.

- 2 Create a new CSV file for writing the processed data.

- 3 Iterate through each line of the input file:

- a If the line starts with “—”, stop processing.

- b If the line contains data for Cook County (Chicago), process it:

- Replace “Missing” with an empty string.

- Convert percentage values to decimals.

- Write the processed data to the CSV file.

- 4 Close both files.

As expected, Copilot did fairly well with this task, offering a solution that almost does the job. It generated this code:

import csv

input_file = "Illinois_weather_1979-2011.txt"

output_file = "Chicago_weather_1979-2011.csv"

with open(input_file, "r") as infile, open(output_file, "w", newline="") as outfile:

reader = csv.reader(infile, delimiter="\t")

writer = csv.writer(outfile)

headers = next(reader)

writer.writerow(headers) # Write headers to the output file

for row in reader:

if row[0].startswith("---"):

break

if "Cook" in row[3]: # Assuming 'Cook' indicates Cook County (Chicago)

processed_row = [field.replace("Missing", "") for field in row]

processed_row = [

str(float(field.strip("%")) / 100) if "%" in field else field

for field in processed_row

]

writer.writerow(processed_row)This code is quite similar to the human version, with only a few differences. First, it handles headers nicely, copying them to the new file—something that the human version did not do. Second, it does the conversion of the percentages to decimals in one line, rather than two, and checks all fields rather than only the last one. Both of those differences are reasonable—even positive. Finally, all of the fields, even the empty “Notes” field, are preserved. This isn’t surprising, since removing/consolidating fields would be a value judgment.

The only problem is that when this code is run, the resulting file, Chicago_ weather_1979-2011.csv, only has the header. That’s because it checks for “Cook” in the entire row:

if 'Cook' in row:For that condition to be true, an entire field in the row would have to be exactly “Cook,” when in fact the rows we want contain a field “Cook County, IL”. The fix for this is to just specify that only row[3] contain “Cook”:

if 'Cook' in row[3]:With that one change, Copilot’s version works perfectly. Here again, we could spend a lot of time experimenting with the prompt to get a complete answer, but the efficient choice is to just make the three-character fix.

The prompt for Colaboratory was a bit different:

The file provided here (Illinois_weather_1979-2011.txt) is for the state of Illinois from 1979 to 2011. Write code to process this file to extract the data for Chicago (Cook County) into a single CSV. Replace the ‘Missing’ strings with empty strings and translate the percentage to a decimal. Use the input file headers to decide what fields are repetitive (and therefore can be omitted). Stop processing the file when the first field of the line is “—”.

The prompt was edited both since there is a 600-character limit in Colaboratory and because, with the original phrasing, the code generator assigned fields incorrectly. The current wording got around both problems:

# prompt: The file provided here (Illinois_weather_1979-2011.txt) is for the

# state of Illinois from 1979 to 2011. Write code to process this file

# to extract the data for Chicago (Cook County) into a single CSV.

# Replace the 'Missing' strings with empty strings and translate the

# percentage to a decimal. Use the input file headers to decide what

# fields are repetitive (and therefore can be omitted). Stop processing

# the file when the first field of the line is "---".

import csv

def process_illinois_weather_data():

"""Processes the Illinois weather data file and extracts Chicago data into a CSV."""

with open("Illinois_weather_1979-2011.txt", "r") as infile, open(

"chicago_weather.csv", "w", newline=""

) as outfile:

reader = csv.reader(infile, delimiter="\t")

writer = csv.writer(outfile)

header = next(reader)

writer.writerow(header)

for row in reader:

if row[0] == "---":

break

if "Cook" in row[3]:

new_row = []

for cell in row:

if cell == "Missing":

new_row.append("")

elif "%" in cell:

new_row.append(str(float(cell.replace("%", "")) / 100))

else:

new_row.append(cell)

writer.writerow(new_row)

process_illinois_weather_data()

print("Chicago weather data extracted to chicago_weather.csv")This approach is a bit different. While it preserves all of the fields just as the Copilot version did, it takes a different approach to processing a row. Rather than changing fields in a row, it instead processes each field in a row and then adds that field to a new row, which it writes to the file when the processing for the row is complete. This approach is arguably slightly less efficient since it creates a new list for each row, but in practice, the performance hit is probably slight.

As with the Copilot version, the code as suggested will write a file with only the header row—and for a very similar reason: in checking for Cook County results, it checks to see if row[1] == “Cook”. This is wrong both because the value would be “Cook County, IL”, as mentioned earlier, and because it checks the Month field, not the County field. The correction would be the same as before. The line should be

if 'Cook' in row[3]:Once that change is made, this code produces the correct result.

As mentioned, this task is fairly straightforward, and so it’s not as much of a challenge for the AI tools as some more complex tasks. The AI bots’ problems came in using the correct technique and field to check the county and in evaluating the fields for possible redundancy. Giving an explicit mapping and instructions in the prompt probably would have helped this, at the expense of making constructing the prompt more laborious.

Summary

- ETL is the process of getting data from one format, making sure that it’s consistent, and then putting it in a format you can use. ETL is the basic step in most data processing.

- Encoding can be problematic with text files, but Python lets you deal with some encoding problems when you load files.

- The most common way to handle a wide range of characters in simple text files is to encode them in the Unicode UTF-8 encoding.

- Delimited and CSV files are common, and the best way to handle them is with the csv module.

- Spreadsheet files (in Excel format) can be more complex than CSV files but can be read and written with third-party libraries.

- Currency symbols, punctuation, and null characters are among the most common data cleaning concerns; be on the watch for them.

- Cleaning and presorting your data file can make other steps faster.

- Writing data in the formats discussed is similar to reading them.

22 Data over the network

This chapter covers

- Fetching files via FTP/SFTP, SSH/SCP, and HTTPS

- Getting data via APIs

- Structured data file formats: JSON and XML

- Scraping data

You’ve seen how to deal with text-based data files. In this chapter, you use Python to move data files over the network. In some cases, those files might be text or spreadsheet files, as discussed in chapter 21, but in other cases, they might be in more structured formats and served from REST or SOAP APIs. Sometimes getting the data may mean scraping it from a website. This chapter discusses all of these situations and shows some common use cases.

22.1 Fetching files

Before you can do anything with data files, you have to get them. Sometimes this process is very easy, such as manually downloading a single zip archive, or maybe the files have been pushed to your machine from somewhere else. Quite often, however, the process is more involved. Maybe a large number of files need to be retrieved from a remote server, files need to be retrieved regularly, or the retrieval process is sufficiently complex to be a pain to do manually. In any of those cases, you might well want to automate fetching the data files with Python.

First, I want to be clear that using a Python script isn’t the only way, or always the best way, to retrieve files. The following sidebar offers more explanation of the factors I consider when deciding whether to use a Python script for file retrieval. Assuming that using Python does make sense for your particular use case, however, this section illustrates some common patterns you might employ.

Although using Python to retrieve files can work very well, it’s not always the best choice. In making a decision, you might want to consider two things:

- Are simpler options available? Depending on your operating system and your experience, you may find that simple shell scripts and command-line tools are simpler and easier to configure. If you don’t have those tools available or aren’t comfortable using them (or the people who will be maintaining them aren’t comfortable with them), you may want to consider a Python script.

- Is the retrieval process complex or tightly coupled with processing? Although those situations are never desirable, they can occur. My rule these days is that if a shell script requires more than a few lines, or if I have to think hard about how to do something in a shell script, it’s probably time to switch to Python.

22.1.1 Using Python to fetch files from an FTP server

File transfer protocol (FTP) has been around for a very long time, but it’s still a simple and easy way to share files when security isn’t a huge concern. To access an FTP server in Python, you can use the ftplib module from the standard library. The steps to follow are straightforward: create an FTP object, connect to a server, and then log in with a username and password (or, quite commonly, with a username of “anonymous” and an empty password).

To continue working with weather data, you can connect to the National Oceanic and Atmospheric Administration FTP server, as shown here:

import ftplib

ftp = ftplib.FTP('tgftp.nws.noaa.gov')

ftp.login()

#> '230 Login successful.'When you’re connected, you can use the ftp object to list and change directories:

ftp.cwd('data')

#> '250 Directory successfully changed.'ftp.nlst()

#> ['climate', 'fnmoc', 'forecasts', 'hurricane_products', 'ls_SS_services',